I’ve stopped thinking about this as “AI vs humans.”

The more time I spend with real systems and real teams, the more obvious it feels: the interesting story is humans + AI as one thing. Not a replacement. Not a toy on the side. A combined system that, if we design it well, is actually more capable than anything we’ve had in any previous era.

That sounds lofty, but let me ground it.

The First Time I Felt “Smaller” Than My Tools

If you’ve ever stared at a wall of logs at 2 a.m., you know the feeling. You’re tired. Something critical is down. The dashboards look like a Christmas tree. You’re trying to hold:

- partial telemetry

- an angry customer in the back of your mind

- past incidents that “feel” similar

- three or four theories about what might be broken

…all at once in your head.

The limit isn’t your willingness to think. The limit is how much you can juggle.

Historically, that’s where we stopped. You build better dashboards, you write better runbooks, you do a postmortem and promise to “learn from it” next time. Then the next weird incident hits and you repeat the cycle with slightly better muscle memory.

Today, there’s a new option: you can offload part of that mess to an AI that never gets tired, never forgets, and can search your entire operational history in seconds.

The first time I let an AI system sit in the middle of that 2 a.m. triage flow, I had a weird emotional reaction. Part of me felt smaller than my tools. This thing could see more, recall more, and enumerate more paths than I ever could.

But after the initial ego hit, something else showed up: relief.

Because my job shifted. I wasn’t the human log parser anymore. I was the one deciding which of those AI-generated paths was acceptable for this network, this customer, this situation.

That’s the moment I started to think of human + AI as one composite operator.

What Humans Still Do Best

It’s tempting to downplay our side of the equation. The models are big and impressive, and even though we all see their failures, it’s natural to imagine a future where they “close the gap” and we become optional.

I don’t buy that.

When I look at actual work – supporting networks, building products, running a business – the uniquely human parts are still the ones that make or break outcomes:

- We care about things. Outages aren’t just numbers to us. They’re patients waiting on results, customers losing trust, teams losing sleep. That gives our decisions weight.

- We understand context. We know which client is in the middle of a renewal, which region is politically sensitive, which workaround already burned goodwill last quarter.

- We handle ambiguity. We’re used to choosing between “less bad” options when data is incomplete and every path has trade-offs.

- We own responsibility. When something goes wrong, a human’s name is on it. That changes how we think.

- We read people. We can tell when a customer is worried but not saying it, when a teammate is overwhelmed, when “technically correct” is the wrong move for the relationship.

Models can mimic pieces of this in text, but they don’t live any of it. Emotion, judgment, and accountability are still deeply human.

What AI Brings That We Never Had Before

At the same time, pretending AI is just a fancy autocomplete is equally wrong. If you strip away the hype and look at what these systems actually do well, they’re giving individual people access to capabilities that used to require entire teams:

- AI can read more than we ever will. Logs, traces, documentation, previous incidents, tickets, emails – all ingested, indexed, and cross-referenced.

- AI doesn’t forget. That obscure edge case from two years ago? The AI can bring it back instantly when it matches today’s problem.

- AI can search exhaustively. It can try hundreds of “what if we…” branches in parallel and give you the three most promising ones to think about.

- AI can shift roles on demand. In one hour it’s an analyst summarizing a pattern; the next it’s a junior developer drafting a fix; the next it’s drafting a customer-friendly summary of what happened.

It’s like walking around with an infinitely patient, reasonably smart apprentice who also happens to have photographic memory and ridiculous pattern recognition. Historically, if you wanted that, you built hierarchies. You hired more people. You added layers and roles and coordination overhead. Now one person with a good AI setup can do work that would have taken a small team a decade ago.

Designing the Human+AI Pair

This is where it gets interesting for me. The real leverage doesn’t come from “using AI.” It comes from being intentional about how the human and the AI fit together. On the product support / network intelligence work I’m involved in, the target picture looks something like this:

- The AI sits in the middle of the telemetry, case history, knowledge base, and tooling.

- When an incident hits, it surfaces likely correlations, similar past incidents, and plausible next steps.

- The human operator uses that as a starting point, not an answer sheet.

In practice, that means:

- The AI is responsible for breadth and speed.

“Here are three patterns I’ve seen in the last year that look like this.”

“Here are the subsystems that changed in the last 24 hours.”

“Here are two prior fixes that align with these symptoms.”

- The human is responsible for meaning and consequence.

“This customer is in the middle of a go-live; we’ll take the slower, safer path.”

“We’ve seen this pattern before, but this time the stakes are higher.”

“We technically can do X, but we promised we wouldn’t.”

The unit of work is no longer “what the engineer can hold in their head.” It’s “what the engineer and AI can hold together.”
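To make that division of labor concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Suggestion` and `HumanContext` types, the `similarity` score, and the action names are invented for illustration and are not taken from any real system. The human step is written as explicit rules only so the example runs; in practice that step is a person's judgment, not a function.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class Suggestion:
    """One AI-proposed next step (hypothetical shape)."""
    action: str
    similarity: float  # assumed 0..1 score: how closely past incidents match
    risk: str          # "low", "medium", or "high"


@dataclass
class HumanContext:
    """Things the operator knows that the telemetry does not show."""
    customer_in_go_live: bool = False
    forbidden_actions: set[str] = field(default_factory=set)  # promises made


def ai_shortlist(suggestions: list[Suggestion], top_n: int = 3) -> list[Suggestion]:
    """Breadth and speed: rank every candidate, surface the best few."""
    ranked = sorted(suggestions, key=lambda s: s.similarity, reverse=True)
    return ranked[:top_n]


def human_decide(shortlist: list[Suggestion], ctx: HumanContext) -> Suggestion | None:
    """Meaning and consequence: apply commitments and stakes to the shortlist."""
    allowed = [s for s in shortlist if s.action not in ctx.forbidden_actions]
    if ctx.customer_in_go_live:
        # Mid go-live, prefer the slower, safer path when one exists.
        low_risk = [s for s in allowed if s.risk == "low"]
        if low_risk:
            allowed = low_risk
    return allowed[0] if allowed else None


candidates = [
    Suggestion("restart_router", 0.90, "high"),
    Suggestion("rollback_config", 0.80, "low"),
    Suggestion("disable_feature_x", 0.95, "low"),
    Suggestion("wait_and_watch", 0.10, "low"),
]
ctx = HumanContext(customer_in_go_live=True,
                   forbidden_actions={"disable_feature_x"})

shortlist = ai_shortlist(candidates)
choice = human_decide(shortlist, ctx)
```

In this toy run the highest-scoring suggestion is dropped because it breaks a promise, and the next one is passed over because the customer is mid go-live. The AI's ranking is the starting point, and the human context decides which option is actually acceptable.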

You start to get a new kind of operator:

- They’re less of a human parser.

- They’re more of a decision-maker, teacher, and orchestrator.

They’re partnering with a system that can see more than they can, but that still needs their sense of what’s appropriate, what’s valuable, and what’s safe.

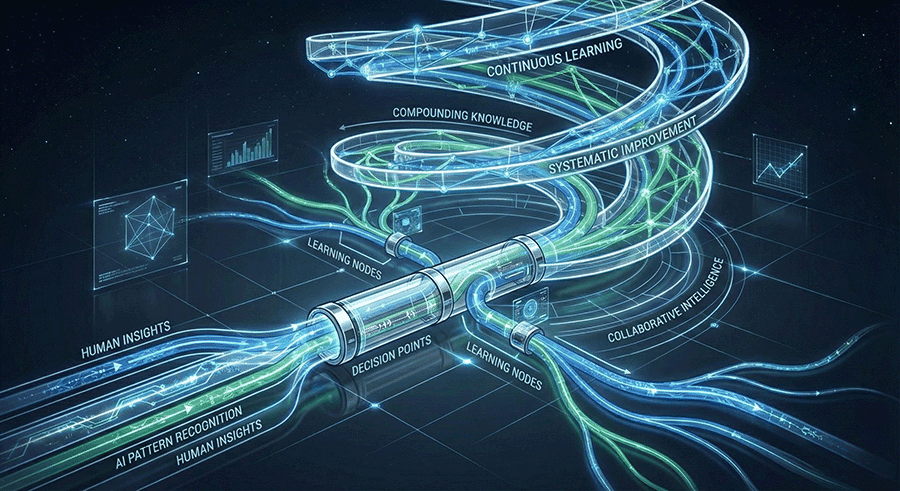

Turning Every Incident Into Shared Learning

The other half of the story is what happens after the incident.

Historically, learning has been leaky:

- The individual learns (“I’ll remember that next time”).

- Maybe the team learns if the postmortem is good and people read it.

- The system itself rarely learns; at best, someone updates a runbook.

With AI in the loop, we can do better – if we design for it. Imagine a world where:

The triage session is captured as a case trace: what we saw, what we considered, what we tried, what worked, and why.

The AI helps structure that trace: “this symptom → this hypothesis → this test → this outcome.” It flags where the human overrode a suggestion and records the rationale.

Those traces feed multiple layers:

- Better internal documentation.

- Better evaluation sets for future models.

- Carefully curated examples for retraining or fine-tuning.
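As a rough illustration of what such a case trace could look like as data, here is a small Python sketch. The field names, the `CaseTrace` and `Step` classes, the incident ID, and the rule that an override must carry a rationale are all assumptions made for this example; a real pipeline would pick its own schema.

```python
from __future__ import annotations
from dataclasses import dataclass, field, asdict
import json


@dataclass
class Step:
    """One link in the chain: symptom -> hypothesis -> test -> outcome."""
    symptom: str
    hypothesis: str
    test: str
    outcome: str
    human_override: bool = False  # True when the human rejected the AI's suggestion
    rationale: str = ""           # why the human overrode it


@dataclass
class CaseTrace:
    incident_id: str
    steps: list[Step] = field(default_factory=list)

    def record(self, step: Step) -> None:
        # An override without a reason teaches nobody anything, so refuse it.
        if step.human_override and not step.rationale:
            raise ValueError("an override must record its rationale")
        self.steps.append(step)

    def overrides(self) -> list[Step]:
        """The steps most worth reviewing: where human and AI disagreed."""
        return [s for s in self.steps if s.human_override]

    def to_json(self) -> str:
        """Serialize for documentation, evaluation sets, or curation."""
        return json.dumps(asdict(self), indent=2)


trace = CaseTrace("INC-0001")  # hypothetical incident ID
trace.record(Step(
    symptom="packet loss on edge link",
    hypothesis="recent config change",
    test="diff config against last known good",
    outcome="no relevant change found",
))
trace.record(Step(
    symptom="packet loss on edge link",
    hypothesis="failing optic",
    test="swap optic during maintenance window",
    outcome="loss cleared",
    human_override=True,
    rationale="AI proposed an immediate swap; customer is mid go-live",
))
exported = trace.to_json()
```

Requiring a rationale on every override is one possible design choice. It keeps the "why" attached to exactly the examples that are most useful later, whether they end up in documentation, an evaluation set, or a curated fine-tuning sample.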

Now “learning together” is literally:

- Humans learning faster because the AI is a tutor and mirror.

- AI systems improving because humans keep feeding them explained examples of real decision-making in context.

We’re not giving the models free rein to mutate themselves. We’re building a pipeline where human experience, human judgment, and AI patterning all compound over time.

A Different Kind of Strong

If you zoom out historically, humans have always used tools to extend themselves.

- We built machines to make our muscles stronger.

- We built engines to move us farther and faster than our legs ever could.

- We built computers to calculate beyond what our working memory could handle.

AI is just the next step in that story – but with a twist. This time we’re extending how we reason, not just how we move or calculate.

That can be scary. It can feel like a threat.

But when I’m in the middle of real work – supporting a system, making a product decision, trying to connect dots across too much information – I don’t feel replaced. I feel like I finally have tools that match the complexity of the problems.

On my own, I’m still bounded by my memory, my energy, my attention span. With a well-integrated AI partner, those limits move.

- I can consider more options before deciding.

- I can pull more history into the present without drowning in it.

- I can spend more of my time on “what should we do?” instead of “what’s even going on?”

That doesn’t make me less human. It makes me a different kind of human than I would’ve been twenty years ago – one whose natural strengths are amplified by a reasoning engine that never sleeps.

And I think that’s the real story here.

Not “Will AI become like us?” Not “Will it take our jobs?”

But:

How do we shape this combined human+AI system so that we, as humans, become more capable, more thoughtful, and more effective than any generation before us?

That’s the question I’m interested in.

And on projects like the network intelligence work I’m doing now, that’s exactly what we’re trying to build: an environment where humans and AI don’t compete, they cooperate – so that every incident, every decision, every messy real-world problem makes both sides a little bit stronger.